Historian puts new spin on scientific revolution

When Columbus discovered America, European culture hadn’t yet grasped the concept of discovery. Various languages had verbs that could be translated as discover, but only in the sense of discovering things like a worm under a rock. Scholars operated within a worldview that all knowledge had been articulated by the ancients, such as Ptolemy, the astronomer who compiled the mathematical details of the Earth-centered universe. As it happened, Ptolemy was also the greatest of ancient geographers. So when Columbus showed that Ptolemy’s grasp of geography was flawed, it opened the way for Copernicus to challenge Ptolemy’s picture of the cosmos as well. Deep thinkers who were paying attention then realized that nature possessed secrets for humankind to “discover.”

“The existence of the idea of discovery is a necessary precondition for science,” writes historian David Wootton. “The discovery of America in 1492 created a new enterprise that intellectuals could engage in: the discovery of new knowledge.”

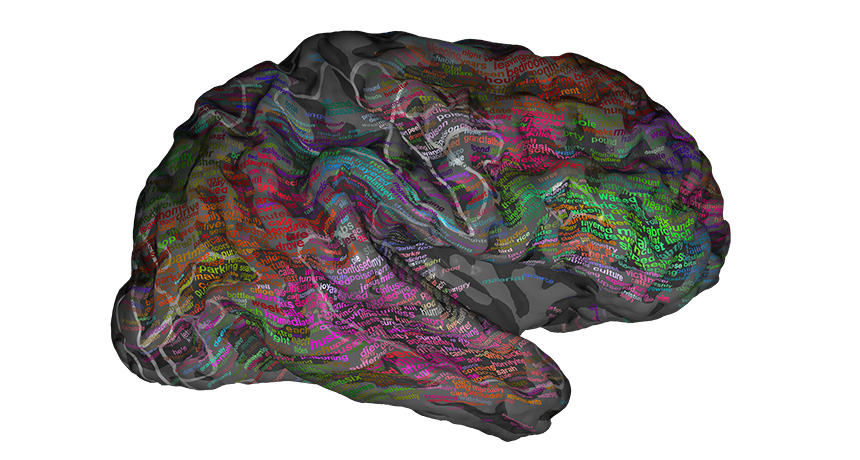

Appreciating the concept of discovery was not enough to instigate the invention of science. The arrival of the printing press in the mid-15th century was also essential. It standardized and magnified the ability of scholars to disseminate knowledge, enabling the growth of communities, cooperation and competition. Late medieval artists’ development of the geometrical principles underlying perspective in paintings also provided important mathematical insights. And, like discovery, other key concepts required labeling and clarifying, among them the idea of “evidence.”

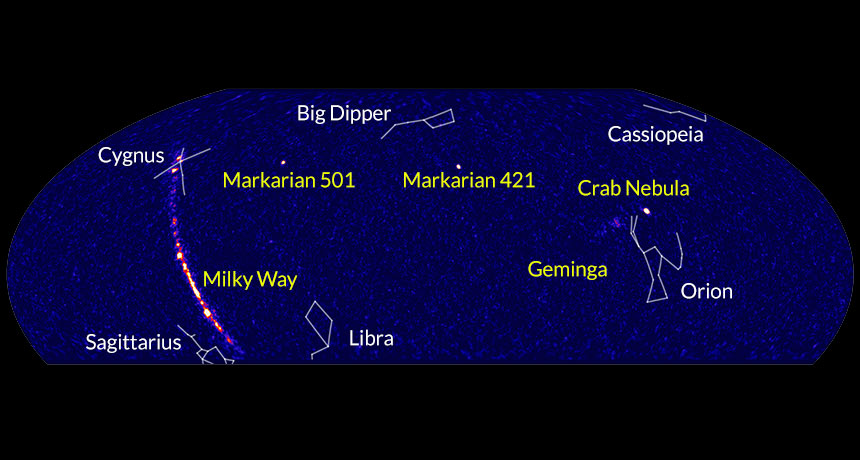

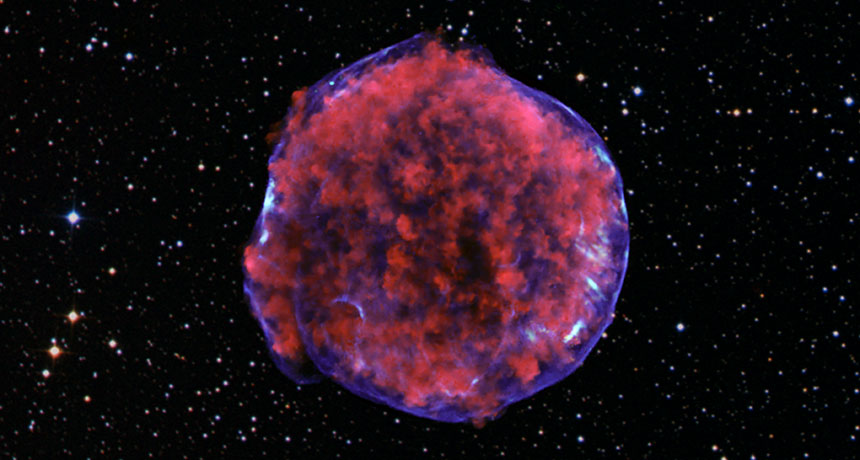

And modern science’s birth required a trigger, a good candidate being the supernova observed by Tycho Brahe in 1572. Suddenly, the heavens became changeable, contradicting the Aristotelian dogma of eternal changeless perfection in the sky. Tycho’s exploding star did not cause the scientific revolution, Wootton avers, but it did announce the revolution’s beginning.

In The Invention of Science, Wootton incorporates these insights into an idiosyncratic but deeply thoughtful account of the rise of science, disagreeing frequently with mainstream science historians and philosophers. He especially scorns the relativists who contend that different scientific views are all mere social constructions, so that none is better than any other. Wootton agrees that approaches to science may be socially influenced in their construction, but he maintains that the real world nevertheless constrains the success of any given approach.

Wootton’s book offers a fresh approach to the history of science, with details not usually encountered in the standard accounts. It might not be the last or even the best word on modern science’s origins or practice, but it certainly identifies aspects whose omission would leave an inadequate picture, one lacking important perspective.